The message arrived late on a Friday evening: I was to join 3,000 participants globally in Runway's fourth Gen48 createathon, with 48 hours to leverage unlimited credits and explore near-limitless creative potential through their AI-powered generative video platform.

For someone who grew up on hand-drawn animation and black-and-white film (the kind where you took your photos to a dark room or the Kodak store to process), this version of the future was unimaginable. My birth home is Kenya, a magical land in many ways - high-end, Disney-level animation tooling and studios haven't historically been one of them. So to have an open-door invitation into a world that so often seemed completely and utterly out of reach was mind-blowing to me - invitation accepted!

Magic Behind the Curtain

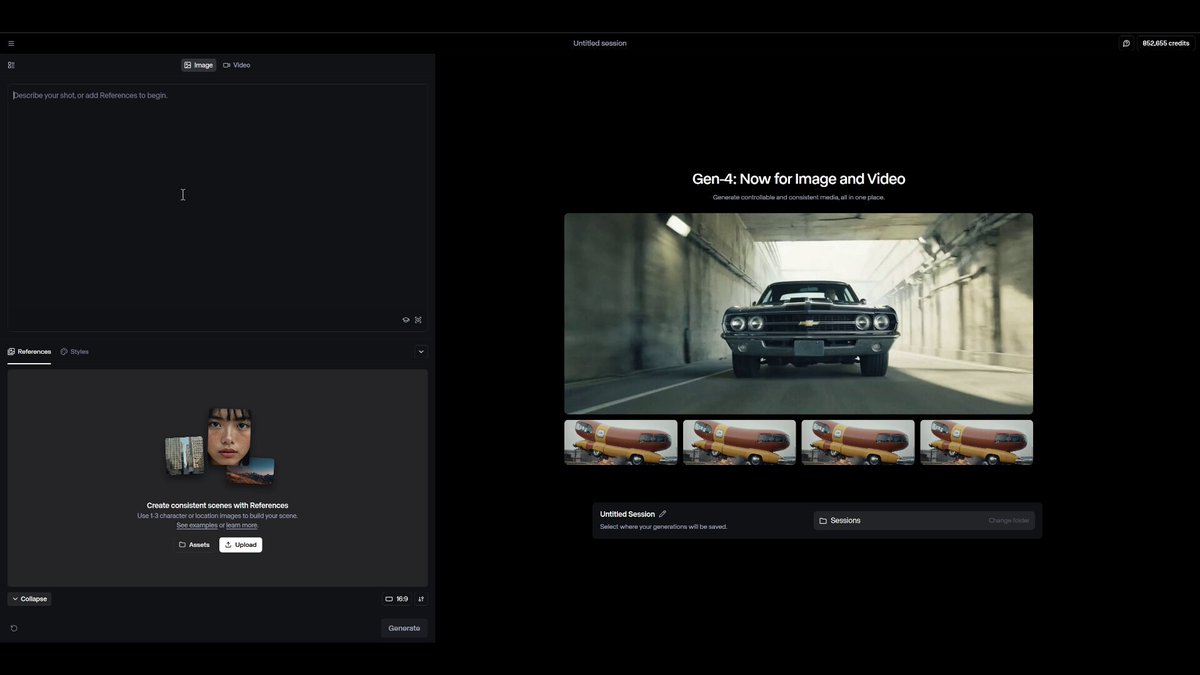

Runway ML isn't just another AI startup. Born in 2018 in New York City, it has been not-so-quietly revolutionising creative expression, building tools that put Hollywood-level production capabilities into the hands of, well, anyone with an internet connection.

Their arsenal includes a series of powerful AI models - Gen-1, Gen-2, Gen-3 Alpha, and the newly minted Gen-4. Each iteration has pushed the boundaries of what's possible, moving from simple video transformations to the ability to generate completely original footage from just text or images.

What makes Runway special, especially for a simple boy from Nairobi, is that it doesn't care about your credentials or connections. The platform strips away the gatekeeping that's defined creative industries for decades. No film school degree? No problem. No access to expensive equipment? Doesn't matter. No industry contacts? Irrelevant.

We all have access to the same technology that helped bring "Everything Everywhere All At Once" to life - an Oscar-winning film that broke all the rules - and that has been embraced by musicians from A$AP Rocky to Kanye West. That creates the potential for those further down the learning curve to participate, whilst offering an even more expansive set of possibilities to those at the frontier.

47H TO GO: Drawing From The Well

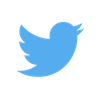

With the clock ticking, I turned to a collection of photographs taken by the brilliant Tom Morgan, a supremely talented photographer and friend whose way with the lens is as raw as it is honest.

As a fan of claymation dating back to the greats like Pingu or Wallace & Gromit, I’d always dreamed of making something in that vein. Step in Runway’s image-to-image feature and we were off to the races.

I was immediately drawn to crafting something for a song from my third album, Legacy: “Baba, I Understand”. The track, produced by and featuring anatu, explores the bond a son has with his father, the tests it might endure, and the understanding born of living in that father’s shoes as we age.

Now for my third collaborator(s) to step in: Runway.

40H TO GO: First Tests, First Failures

Midnight approached as I completed my first tests. The results were… disappointing. I had actually started down the road towards making two videos, one of them for a song called STRUT, but whilst some of the shots were awesome, it wasn’t gelling. Meanwhile BABA languished. Eyes too big for my stomach, not for the first time.

A decision had to be made, but it was a tough one.

I posted my attempts to the Gen48 Discord channel, where participants from around the world were sharing their own works-in-progress. Within minutes, suggestions came flooding in.

This wasn’t competition — it was collaboration across time zones and continents. A filmmaker from Seoul suggested prompt structures that had worked for her experiments. An animator from Buenos Aires shared screenshots of his settings. We were cooking! Time to focus.

20H TO GO: The Breakthrough

With a busy weekend of unavoidable distractions, I was forced away from the project. But upon my return I was able to dive fully into Runway’s unreleased references feature. For anyone who’s worked with AI generation, consistency between frames has always been the most monumental challenge. Characters have invariably morphed subtly from one scene to the next, regardless of what prompt engineering or seed tinkering you tried!

We were the first to access this new feature, which allowed me to set specific reference images that the AI would use as anchors throughout the generation process. Suddenly, my claymation self maintained consistent features across different expressions and scenes!!

5H TO GO: Breaking Point

Every creative project has a moment where you question everything. Mine came with 5 hours remaining. Eyes burning from screen time, I had gone to sleep, then had to work on some other stuff. Now we were down to the wire. Sole focus: BABA.

So many iterations to get just the right shot. How long this must have taken over the ages. Was this actually any good? Did it capture what I wanted to express about the song? Was I just creating a novelty rather than something meaningful? Didn't matter: this was insanely fun. The most magnificent saving grace was the ability to easily start again, try something off the wall, and learn about different camera shots and angles, dynamics and lighting effects, character movements and framing.

Each generation propelling you up, forward, onward!

I stepped away from the computer. It wasn’t just about the technical understanding of claymation, or cinematography here — it was about telling a story that might otherwise go unheard, bringing a unique perspective to life — mine/ours — that would otherwise remain unseen.

2H TO GO: Final Push

It was coming together. Time to make final adjustments. The claymation figures now moved with intention through scenes that paralleled the emotional journey of the song. The faces, my face among them, conveyed subtle emotions across three generations that somehow felt more authentic in clay than I might even have managed to capture by attempting a traditional music video.

The submissions were streaming in from across the world. Someone in Cali stayed up all night and got through over 1,000 generations. A designer in India suggested color grading adjustments that had worked for him, whilst a team from Japan delivered some absolute wizardry. Man, people are talented.

Submission: Reflection

With minutes to spare, I uploaded my finished video to the Gen48 submission portal. The music anatu and I had created was given new life. In way less than 48 hours, I had created something that would have been utterly impossible just a few years ago. Not just technically, but conceptually impossible — I wouldn’t have even dreamed of attempting it.

The claymation music video for “Baba, I Understand” wasn’t perfect. It had quirks and imperfections (I had to chop half the song out due to lack of time!), but somehow those add to its charm. More importantly, it felt like mine, expressing something deeply personal about the music in a manner that feels refreshing.

I can’t help but think about what this means for storytellers everywhere.

When tools this powerful become available to anyone with an internet connection, voices of all shapes and sizes have new ways to be heard. Stories that might never have been visualised can now exist, find their audience, and make a difference in people’s lives.

For a boy who grew up marvelling at incredible films and animation, never imagining he could create one himself, this wasn’t just a competition entry; it was a glimpse of a future where creativity isn’t limited by geography, economics, or access. The future isn’t just about technology; it’s about who gets to tell their stories. And if my 48-hour journey with Runway ML is any indication, we’re about to hear many more from voices we’ve never heard before. How rad is that?

What’s your story? Whether you’re a seasoned creative or someone who’s always watched from the sidelines, innumerable tools are available to you. Start small, experiment boldly, and share your unique perspective. Join platforms like Runway’s createathons, find your community, and don’t be afraid to ask for help along the way. The barriers are falling: all that’s left is to begin. I can’t wait to see what you create. Feel free to reach out; my contacts are below.

Read More

Papa is an artist, musician and writer.

Music On Chain — Papa

Songcamp — Papa

Connect

Farcaster @papa — https://warpcast.com/papa

Lens @papajams — https://hey.xyz/u/papajams

Twitter @papajimjams — https://twitter.com/papajimjams

Discover the magic of creativity unleashed as @papa shares insights from an exhilarating 48-hour createathon with Runway, where barriers were broken and personal stories flourished. An inspiring testament that technology empowers everyone to embrace their artistic voice, regardless of background or access.